I sit behind an IPv4-only CGNAT, which is very annoying for self-hosting stuff or for using TunnelBroker for IPv6. Sure, there are options like Cloudflare’s Tunnel for the self-hosted service stuff, but that’s a huge ask on the trust front. It also doesn’t address the lower-level networking needed for 6in4/6to4/6rd/etc.

An important point, and something I initially overlooked, is choosing a VPS host that has low latency to the TB service. The lower the ping time from the VPS to TB, the lower the impact on your IPv6 internet experience. For example, if your ping time from home to the VPS is 50ms and the ping time from the VPS to TB is 30ms, you’re going to have a total IPv6 ping of at least 80ms from home.
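To put numbers on that before committing to a VPS, something like the following rough sketch could help. It assumes the usual summary line printed by Linux (or BSD) `ping`; the function names and the `TB_SERVER_IP` variable are made up for illustration.

```shell
# Pull the average RTT (in ms) out of ping's summary line, e.g.
# "rtt min/avg/max/mdev = 28.1/30.2/33.0/1.5 ms".
avg_rtt() {
    awk -F'/' '/^(rtt|round-trip)/ { print $5 }'
}

# The two legs add up, so this is a lower bound on the home -> TB round trip.
total_rtt() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.1f\n", a + b }'
}

# Hypothetical usage, run on the VPS (TB_SERVER_IP is a placeholder):
#   vps_to_tb=$(ping -c 5 "$TB_SERVER_IP" | avg_rtt)
#   total_rtt 50 "$vps_to_tb"
```

Running the same measurement from a couple of candidate VPS locations is a cheap way to compare them before moving anything over.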

I like WireGuard and I already have a v4 WireGuard network up and running, so I’m using that as the starting point. I have tried using WireGuard for all traffic from my router before without much success (likely a skill issue). Plus I find the whole “allowed-ips” thing a huge hassle. So why not just overlay a VXLAN? That way, I could treat the thing like a direct link and route any traffic over it without needing to worry about those dang “allowed-ips” settings.
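For the home end of that overlay, a minimal sketch with plain iproute2 might look like this. It assumes a Linux-based router, reuses the post's placeholder addresses (a.a.a.2 is the home router's wg address, dead:beef:2::2 its address on the overlay), and puts the IPv6 address directly on the VXLAN interface, which is my assumption rather than the actual home setup. Since WireGuard only ever sees VXLAN UDP packets between the two wg addresses, allowed-ips on each peer only needs the other end's wg address.

```shell
# Hypothetical home-router side of the VXLAN overlay.
WG_LOCAL="${WG_LOCAL:-a.a.a.2}"    # home router wg address (placeholder)
WG_REMOTE="${WG_REMOTE:-a.a.a.1}"  # VPS wg address (placeholder)
VNI=101
PORT=4789

# Print the commands by default; set APPLY=1 (as root) to actually run them.
run() {
    if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "$*"; fi
}

vxlan_up() {
    # Point-to-point VXLAN between the two WireGuard addresses.
    run ip link add vxlan101 type vxlan id "$VNI" local "$WG_LOCAL" remote "$WG_REMOTE" dstport "$PORT"
    run ip link set vxlan101 up
    # Home router's address on the overlay, then default IPv6 via the VPS.
    run ip -6 addr add dead:beef:2::2/64 dev vxlan101
    run ip -6 route add default via dead:beef:2::1 dev vxlan101
}

# Usage: vxlan_up            # dry run, prints the commands
#        APPLY=1 vxlan_up    # applies them
```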

    # /etc/netplan/100-muhplan.yml
    network:
      version: 2
      tunnels:
        # he-ipv6 configuration is provided by tunnel broker
        # routes: is changed slightly to use a custom routing table.
        # routing-policy is added to do source based routing so we don't interfere with host's own ipv6 connection
        he-ipv6:
          mode: sit
          remote: x.x.x.x
          local: y.y.y.y
          addresses:
            - dead:beef:1::2 # this endpoint's ipv6 address
          routes:
            - to: default
              via: dead:beef:1::1 # the other end of the tunnel's ipv6 address
              table: 205 # choose any id you want, doesn't really matter as long as it's not used.
              on-link: true
          routing-policy:
            - from: dead:beef:1::2/128 # same as this endpoint
              table: 205
            - from: dead:beef:2::/64 # the routed /64 network.
              table: 205
            - from: dead:beef:3::/48 # the routed /48 if you choose to use it.
              table: 205
            - from: dead:beef:3::/48 # put /48 to /48 traffic into the main table (or whatever table you want)
              to: dead:beef:3::/48
              table: 254
              priority: 10 # high priority to keep it "above" the others.
        # setup a simple vxlan
        # lets us skip the routing/firewall nightmare that wireguard can add to this mess
        vxlan101:
          mode: vxlan
          id: 101
          local: a.a.a.1 # local wg address
          remote: a.a.a.2 # remote wg address (home router)
          port: 4789
      bridges:
        vxbr0:
          interfaces: [vxlan101]
          addresses:
            - dead:beef:2::1/64 # could be anything ipv6, but for mine I used the routed /64 network.
          routes:
            - to: dead:beef:3::/48
              via: dead:beef:2::2 # home router
              on-link: true

Figuring out where things were failing was tricky as I wasn’t sure if the issue was with my home firewall or my VPS. Pings to and from the VPS were working, but nothing going thru it worked. I thought I had the right ip6tables rules in place but evidently I did not. Hmm

Reviewing my steps, I found I had forgotten all about net.ipv6.conf.all.forwarding! A quick sysctl got it working, and adding a conf file to /etc/sysctl.d/ made it survive reboots.
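For reference, that fix can be sketched roughly as below. The drop-in file name `99-ipv6-forward.conf` and the helper function are arbitrary choices for this example, not necessarily what ended up on the VPS.

```shell
# Write a sysctl drop-in that turns IPv6 forwarding on at boot.
# The target directory is a parameter so the helper can be tried somewhere harmless.
persist_ipv6_forwarding() {
    dir="${1:-/etc/sysctl.d}"
    printf '%s\n' 'net.ipv6.conf.all.forwarding = 1' > "$dir/99-ipv6-forward.conf"
}

# Typical use on the VPS (as root):
#   sysctl -w net.ipv6.conf.all.forwarding=1   # takes effect immediately
#   persist_ipv6_forwarding                    # survives reboots
#   sysctl --system                            # reloads all drop-in files
```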

Ping traffic was flowing both ways, but trying to visit a v6 website like ip6.me would fail. I grabbed a copy of tshark and watched the traffic, which showed me the issue was with the VPS. Thankfully UFW has some logging that confirmed the issue was indeed with ip6tables, and the output helped me write the necessary rules to allow traffic thru.
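The UFW log lines are long, so a small filter makes the blocked flows easier to read. This is a sketch that assumes the usual netfilter-style `KEY=value` fields after a `[UFW BLOCK]` prefix; the function name is made up, and the log location varies by distro (`/var/log/ufw.log` is common on Ubuntu).

```shell
# Summarise blocked packets as "in-if -> out-if PROTO src -> dst".
ufw_blocks() {
    awk '/\[UFW BLOCK\]/ {
        iif = oif = src = dst = proto = "-"
        for (i = 1; i <= NF; i++) {
            if      ($i ~ /^IN=./)    iif   = substr($i, 4)
            else if ($i ~ /^OUT=./)   oif   = substr($i, 5)
            else if ($i ~ /^SRC=./)   src   = substr($i, 5)
            else if ($i ~ /^DST=./)   dst   = substr($i, 5)
            else if ($i ~ /^PROTO=./) proto = substr($i, 7)
        }
        printf "%s -> %s %s %s -> %s\n", iif, oif, proto, src, dst
    }'
}

# Usage (log path is an assumption):
#   ufw_blocks < /var/log/ufw.log | sort | uniq -c | sort -rn
```

A forwarded packet that gets dropped shows both an in and an out interface, which is a good hint that a `FORWARD` rule is missing rather than an `INPUT` one.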

    ip6tables -I FORWARD 1 -i he-ipv6 -d dead:beef:3::/48 -j ACCEPT
    ip6tables -I FORWARD 1 -o he-ipv6 -s dead:beef:3::/48 -j ACCEPT

That allowed the traffic to flow in and out of the home network. However, reboots are a problem. Thankfully UFW allows us to easily add the above rules so they survive a reboot.

    ufw route allow in on he-ipv6 to dead:beef:3::/48
    ufw route allow out on he-ipv6 from dead:beef:3::/48
    #these don't seem to be needed? Default UFW firewall has ESTABLISHED,RELATED set.

Of course, after I started setting the static addresses and updating my local DNS, I realized I should have used a ULA prefix with NPTv6 to make future migrations easier.
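If you go the ULA route, the prefix is meant to be a random /48 under fd00::/8 (RFC 4193). A quick sketch for picking one is below; it simply pulls 40 random bits from `/dev/urandom` rather than following the hash-based procedure the RFC suggests, and the function name is made up.

```shell
# Generate a random ULA /48: "fd" followed by a 40-bit random Global ID.
gen_ula_prefix() {
    hex=$(od -An -N5 -tx1 /dev/urandom | tr -d ' \n')   # 10 hex digits
    a=$(printf '%s' "$hex" | cut -c1-2)
    b=$(printf '%s' "$hex" | cut -c3-6)
    c=$(printf '%s' "$hex" | cut -c7-10)
    printf 'fd%s:%s:%s::/48\n' "$a" "$b" "$c"
}

# Usage: gen_ula_prefix
```

The idea is that the LAN keeps this prefix forever, and NPTv6 maps it one-to-one onto whatever routed /48 the current tunnel hands out.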

Improvements?

- I’d like to revisit using WireGuard without VXLAN. It is another layer that can go wrong, may not be needed, and cuts down the maximum MTU.

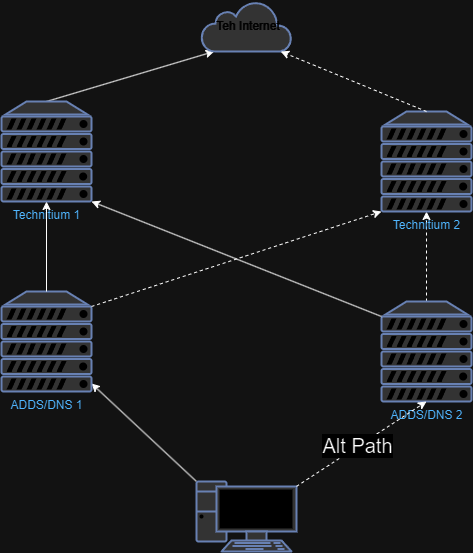

- If I ever got a second home internet connection, I’d like to aggregate traffic so that my effective home internet speed improves.

- Migrate NPTv6 up to the VPS.
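On the MTU point, the cost of the extra VXLAN layer is easy to estimate. The sketch below assumes a WireGuard interface MTU of 1420 (what wg-quick typically picks on a 1500-byte uplink) and VXLAN carried over IPv4; adjust the numbers if your setup differs.

```shell
WG_MTU=1420          # assumed WireGuard interface MTU
VXLAN_OVERHEAD=50    # outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet (14)

# MTU left for packets inside the VXLAN, given the underlying interface MTU.
vxlan_mtu() {
    echo $(( ${1:-$WG_MTU} - VXLAN_OVERHEAD ))
}

# Usage: vxlan_mtu        # uses WG_MTU
#        vxlan_mtu 1400   # for a smaller WireGuard MTU
```

With those assumptions the overlay ends up at 1370 bytes, still above IPv6's 1280-byte minimum. The sit tunnel on the VPS costs another 20 bytes off that side's uplink MTU, but on a 1500-byte uplink that leaves 1480, so the VXLAN leg is the tighter of the two.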